Notes on Arm Mali

1. Why this page

Arm's Mali GPUs have rich docs and code, making them fun to hack/mod. This page documents what we learnt about Mali during our research. Hopefully it will help others.

Disclaimer: Much of the content comes from Arm's official documents and blogs, and we do our best to attribute the origins. A lot of the rest is based on our own guesswork and code reading.

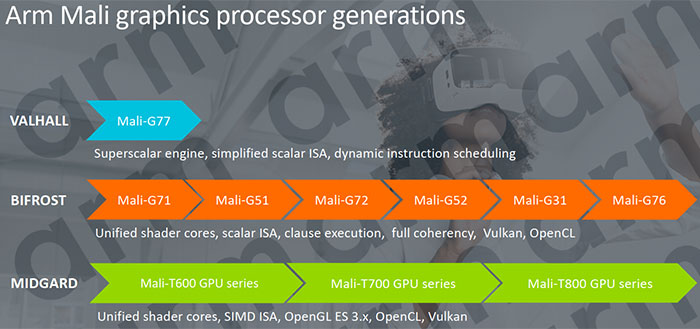

2. Mali generations

Mali-400 (Allwinner A20/A64 boards)

Mali-450 (Utgard; HiKey)

Mali-T6x0 (Midgard; Juno, Firefly, Chromebook, Odroid XU3; Odroid XU4)

Mali-T7x0 (Midgard; Firefly, Tinkerboard, Chromebook)

Mali-T8x0 (Midgard; Firefly 2, Chromebook)

Mali-G71 (Bifrost Gen1; Hikey960)

Mali-G72 (Bifrost Gen2; Hikey970)

Mali-G31 (Bifrost Gen1; Odroid HC4; Odroid C4)

Mali-G52 (Bifrost Gen2; Odroid N2)

"MPx" means the GPU has x shader cores.

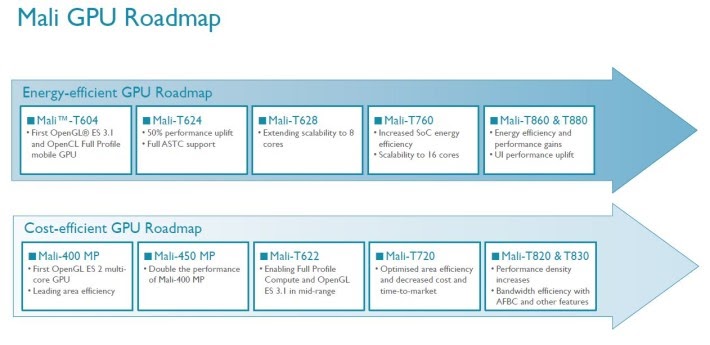

The roadmaps from Arm

3. COTS boards

| Board | Buy? | CPU | GPU | OP-TEE? | OS support |
|---|---|---|---|---|---|
| Hikey | N (Arrow) | AArch64 | Utgard | Y | Debian/AOSP |
| Hikey960 | N (Arrow) | AArch64 | Bifrost G71 MP8 | Y | Debian; AOSP (as refboard) |
| Hikey970 | N | AArch64 | Bifrost G72 MP12 | N (link) | AOSP |
| Odroid XU4 | Y | A15 | Midgard Mali T628 MP6 | N | Debian (good) |
| Firefly 2 (rk3288) | Y | A17 | Midgard | Y | Debian? Unclear |
| Odroid C4/HC4 | Y | AArch64 | Bifrost G31 MP2 (per Wikipedia; ~20 GFLOPS/core?) | | |
| Odroid N2 | Y | AArch64 | Bifrost G52 MP4 (~30 GFLOPS/core?) | | |

ARM's official stack:

Linux/Debian -- OpenGL ES; OpenCL; No OpenGL.

AOSP -- OpenGL (?), Vulkan, OpenCL(?)

ODROID C4

ODROID-HC4 (same CPU): Mali G31 (Bifrost Gen1) at 650 MHz

"Ultra efficient", low cost https://developer.arm.com/ip-products/graphics-and-multimedia/mali-gpus/mali-g31-gpu

ODROID N2

Mali G52 6EE?? (Bifrost Gen2), "Mainstream"

ODROID XU4

http://www.hardkernel.com/main/products/prdt_info.php?g_code=G143452239825&tab_idx=1 Mali T628 MP6 (OpenGL ES 3.0/2.0/1.1 and OpenCL 1.1 Full Profile). It seems to have pretty good Linux desktop support: http://ameridroid.com/t/xu4

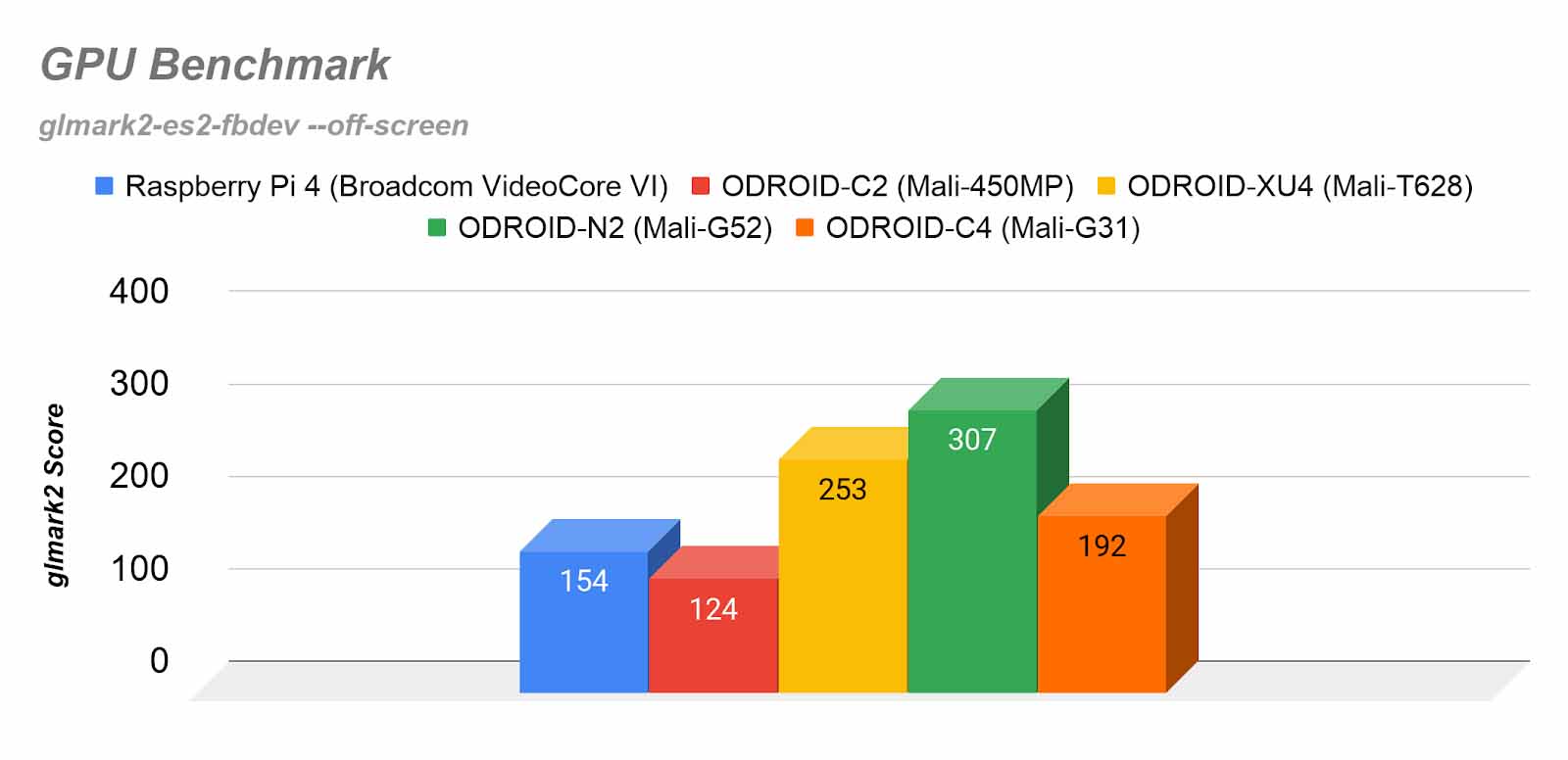

(credits: Odroid) Note: XU4 GPU performance still far exceeds Rpi4/G31. Woohoo!

Hikey970

ARM Mali-G72 MP12 GPU. MP12 -- 12 cores

AOSP support. Debian support seems pretty sparse.

[Dec 2020] Out of stock everywhere; does not look promising.

Hikey960

SoC: Kirin 960 [Mar 2020] Seems out of stock everywhere. Bad.

Mali G71 MP8, Bifrost arch. MP8 -- 8 cores

Kernel tree 4.19.5 (hikey960-upstream-rebase) - custom 96boards hikey kernel on Debian

- Video does not work; no fbdev and hdmi support, known issue

Vulkan only on AOSP https://www.96boards.org/blog/vulkan-hikey960/

Debian

Heejin's build instructions: https://docs.google.com/document/d/1nP2pqNe4sJMY_mg0hksEFs6OayGto4XJeKht09zTmRU/edit

4.19 kernel with a prebuilt image https://wiki.debian.org/InstallingDebianOn/96Boards/HiKey960 [status] Mali driver downloaded as source from Arm's official website. Built from source and insmod. Works for compute (OCL); no graphics support.

AOSP

Prebuilt AOSP image. Prebuilt 4.19 kernel. GFX working. OCL: unknown but presumed to be ok. Android kernel -- the Bifrost driver seems older than the official one? https://source.android.com/setup/build/devices

Hikey620

Mali-450 MP4 (Utgard). Kernel support for Mali is integrated for AOSP, but not for Debian (probably not a primary goal of Linaro).

The most recent Debian kernel does NOT integrate the Mali driver (devices/gpu/mali): https://github.com/Linaro/documentation/blob/master/Reference-Platform/Releases/RPB_16.06/ConsumerEdition/HiKey/BFSDebianRPB.md

Android’s support for GPU is pretty good https://source.android.com/source/devices#620hikey

4. Hardware internals

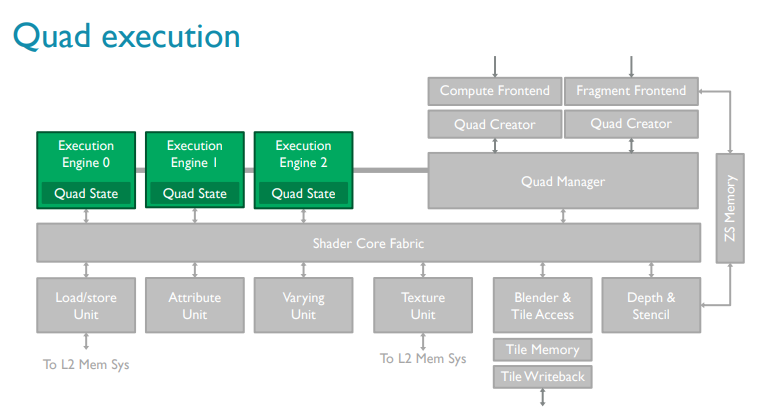

The fundamental building block in Arm's design is the "quad" execution engine.

G71: 4-wide SIMD per quad; 3x quads per core. 12 FMAs at the same time. These 3 quads are managed by a core's quad manager.

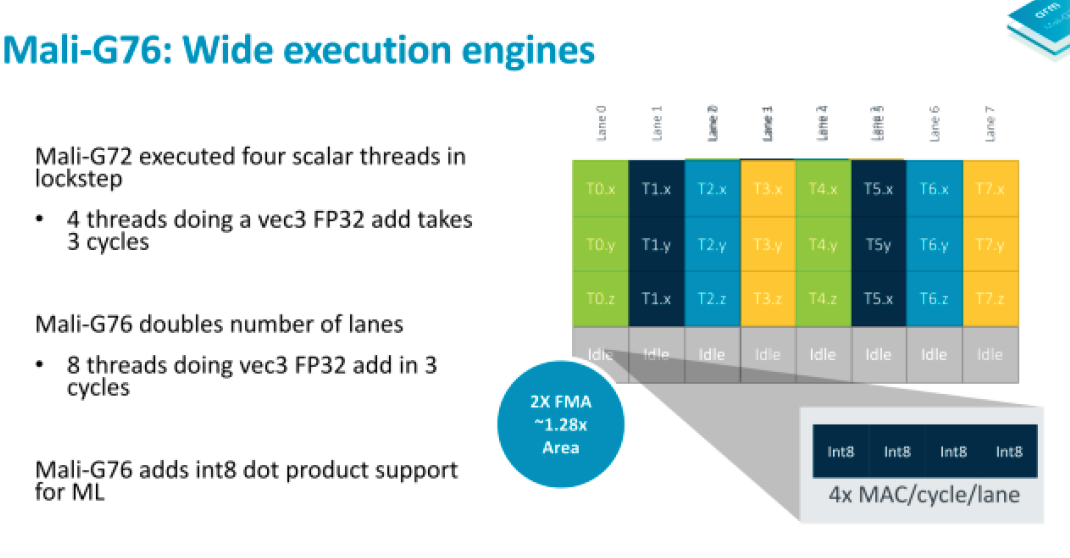

G76: 8-wide SIMD per quad, 3 quads per core.

Used to be a "narrow wavefront" (before G76)

Midgard: VLIW -- extracting 4-way ILP from each thread. Bifrost -- SIMD grouping 4 threads together
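As a quick sanity check on the numbers above, the following C sketch computes the FMA lanes per core from the quad count and SIMD width, plus a theoretical peak FP32 rate. The function names are ours. The formula assumes every lane retires one FMA (counted as 2 FLOPs) per cycle, which real workloads will not sustain.

```c
#include <assert.h>
#include <stdint.h>

/* FMA lanes per shader core: quads per core times SIMD lanes per quad. */
uint32_t fma_lanes_per_core(uint32_t quads_per_core, uint32_t lanes_per_quad)
{
    assert(quads_per_core > 0 && lanes_per_quad > 0);
    return quads_per_core * lanes_per_quad;
}

/*
 * Theoretical peak FP32 GFLOPS.
 * Assumption: one FMA per lane per cycle, and one FMA counts as 2 FLOPs.
 */
double peak_gflops(uint32_t cores, uint32_t lanes_per_core, double clock_mhz)
{
    assert(clock_mhz > 0.0);
    return (double)cores * (double)lanes_per_core * 2.0 * clock_mhz / 1000.0;
}
```

For example, fma_lanes_per_core(3, 4) gives the 12 FMAs per G71 core quoted above, and fma_lanes_per_core(3, 8) gives 24 for G76. With a made-up round clock of 1000 MHz (not a measured frequency), peak_gflops(8, 12, 1000.0) evaluates to 192 for a G71 MP8.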

Anandtech's writeup is particularly useful:

In both the Mali-G71 and G72, a quad is just that: a 4-wide SIMD unit, with each lane possessing separate FMA and ADD/SF pipes. Fittingly, the width of a wavefront at the ISA-level for these parts has also been just 4 instructions, meaning all of the threads within a wavefront are issued in a single cycle. Overall, Bifrost’s use of a 4-wide design was a notably narrow choice relative to most other graphics architectures.

This is a very interesting change because, simply put, the size of a wavefront is typically a defining feature of an architecture. For long-lived architectures, especially in the PC space, wavefront sizes haven’t changed for years.

Bifrost

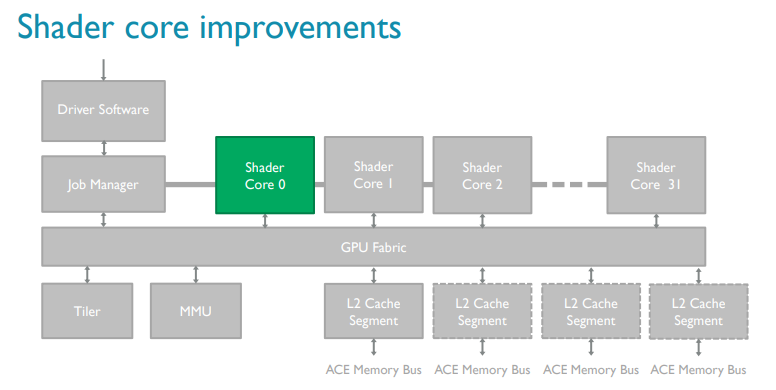

Arm's slides:

From Arm's blog (italic fonts added later)

Mali GPUs use an architecture in which instructions operate on multiple data elements simultaneously. The peak throughput depends on the hardware implementation of the Mali GPU type and configuration. Mali GPUs can contain many identical shader cores. Each shader core supports hundreds of concurrently executing threads.

Each shader core contains:

• One to three arithmetic pipelines or execution engines.

• One load-store pipeline.

• One texture pipeline.

OpenCL typically only uses the arithmetic pipelines or execution engines and the load-store pipelines. The texture pipeline is only used for reading image data types. In the execution engines in Mali Bifrost GPUs, scalar instructions are executed in parallel so the GPU operates on multiple data elements simultaneously. You are not required to vectorize your code to do this.

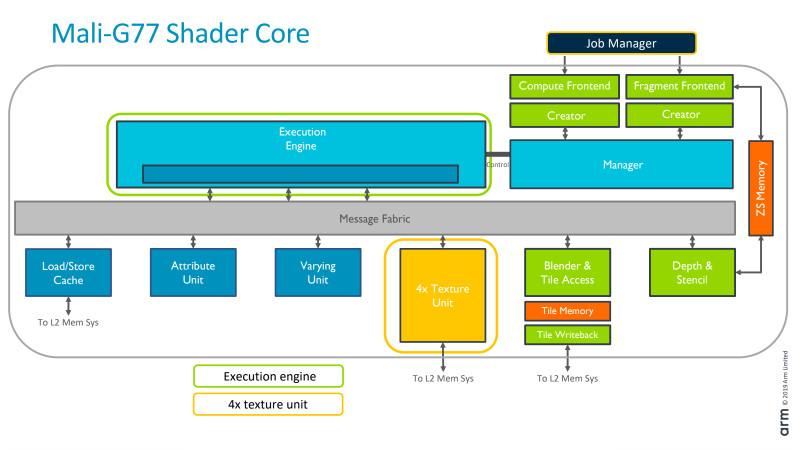

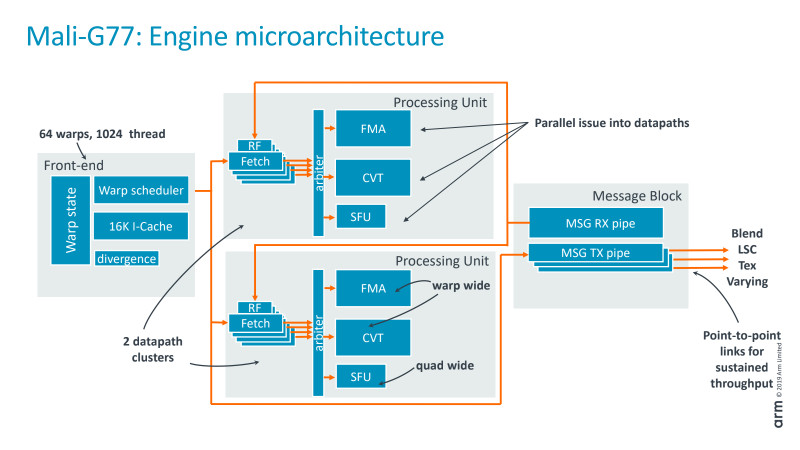

G77 (Valhalla)

No longer a "quad engine"; much wider.

2x processing units per core

5. Resources

5.1 Projects

PAtrace -- useful tools for Mali GLES record & replay https://github.com/ARM-software/patrace

Panfrost https://xdc2018.x.org/slides/Panfrost-XDC_2018.pdf

5.2 Articles

- Good blog series, especially on Bifrost internals: https://community.arm.com/developer/tools-software/graphics/b/blog/posts/the-mali-gpu-an-abstract-machine-part-4---the-bifrost-shader-core

- The lima wiki provides lots of good info about Mali (may be outdated): http://limadriver.org/T6xx+ISA/

- Mali hardware (including 400): https://limadriver.org/Hardware/

- Lima driver arch (reverse engineering, hw/sw interaction ... can be useful): https://people.freedesktop.org/~libv/FOSDEM2012_lima.pdf

- T880 internals (good; the GPU was used in the MediaTek X20): https://www.hotchips.org/wp-content/uploads/hc_archives/hc27/HC27.25-Tuesday-Epub/HC27.25.50-GPU-Epub/HC27.25.531-Mali-T880-Bratt-ARM-2015_08_23.pdf

- G71, Bifrost (good): https://www.hotchips.org/wp-content/uploads/hc_archives/hc28/HC28.22-Monday-Epub/HC28.22.10-GPU-HPC-Epub/HC28.22.110-Bifrost-JemDavies-ARM-v04-9.pdf

- “ARM Mali GPU Midgard Architecture”, good slides on Midgard (2016): http://fileadmin.cs.lth.se/cs/Education/EDAN35/guestLectures/ARM-Mali.pdf https://www.hotchips.org/wp-content/uploads/hc_archives/hc28/HC28.22-Monday-Epub/HC28.22.10-GPU-HPC-Epub/HC28.22.110-Bifrost-JemDavies-ARM-v04-9.pdf

- Mali’s shader core: unified for vertex/fragment/compute, varying 1--16; shared L2: 32--64 KB. http://www.anandtech.com/show/8234/arms-mali-midgard-architecture-explored/7

- Midgard GPU arch (overview): http://malideveloper.arm.com/downloads/ARM_Game_Developer_Days/PDFs/2-Mali-GPU-architecture-overview-and-tile-local-storage.pdf

- Arm Mali officially supported boards (old?): https://developer.arm.com/products/software/mali-drivers/user-space, including the T760 MP4: http://en.t-firefly.com/en/firenow/firefly_rk3288/

- Linux’s support for Mali: https://wiki.debian.org/MaliGraphics

- Blogs on Mali driver internals (in Chinese, verbose; somewhat useful): https://jizhuoran.gitbook.io/mali-gpu/mali-gpu-driver/she-bei-zhu-ce-gpu-register

6. The kernel driver

6.1 Acronyms

kbdev -- kbase device. This corresponds to a GPU.

kctx -- a GPU context (?)

GP -- geometry processor

PP -- pixel processor

group -- a render group, i.e. all cores sharing the same Mali MMU. See struct mali_group.

kbase -- the kernel driver instance for Midgard

tlstream -- timeline stream (for trace recording)

js -- job slot, as exposed by the GPU

jc -- job chain

jd -- job dispatcher (in the driver)

AS -- address space (for the GPU)

LPU -- Logical Processing Unit. For timeline display only (?)

6.2 Overview

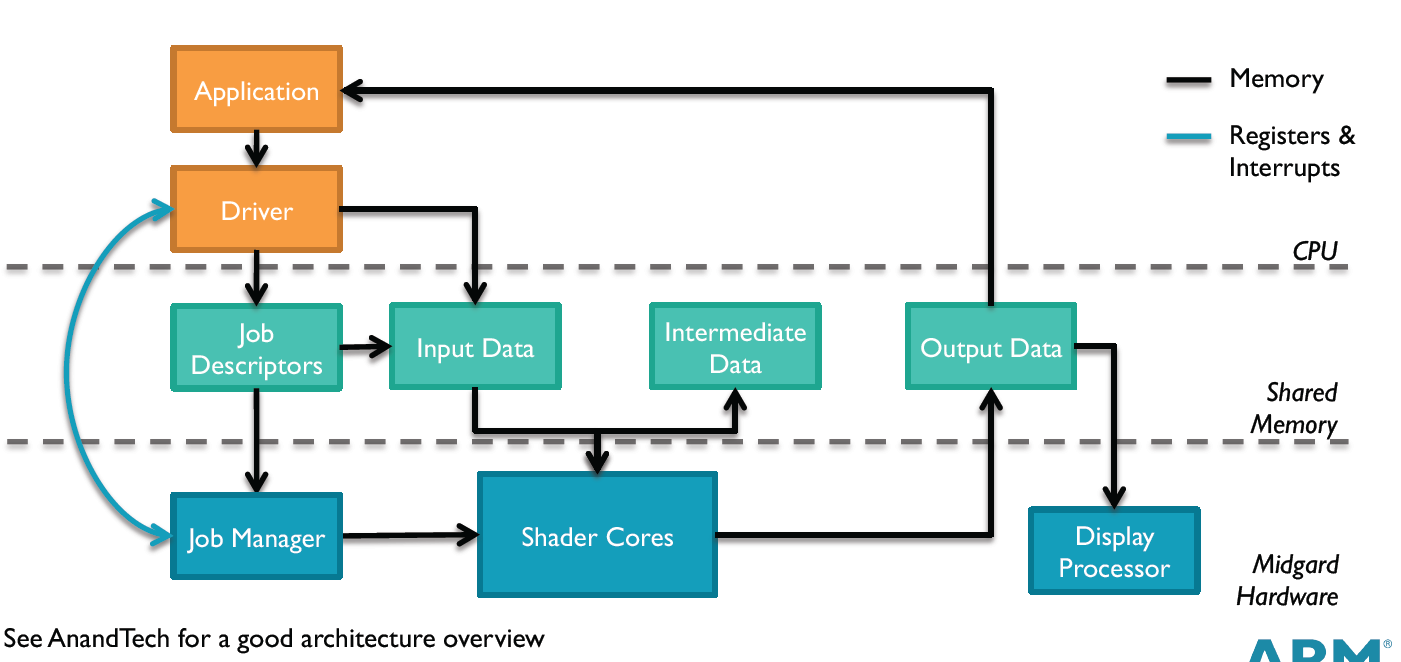

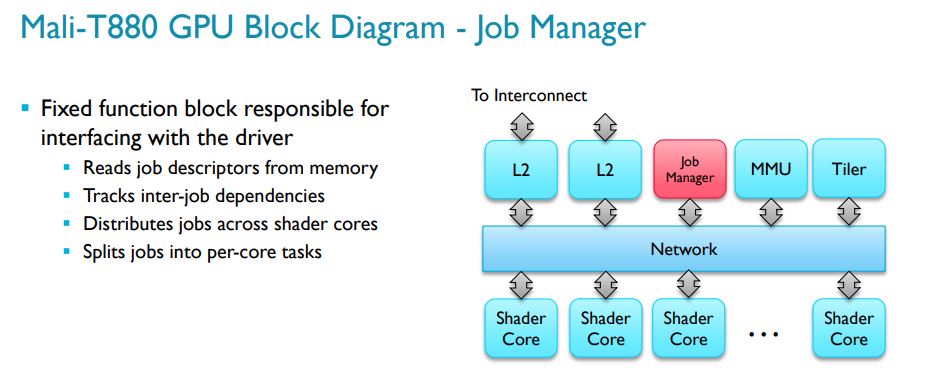

6.3 Job chains

A job chain is a binary blob of GPU-executable code plus its metadata, wrapped in an atom structure. Between user space and the kernel (u/k), struct base_jd_atom_v2 is used; see mali_base_kernel.h. Inside the kernel, struct kbase_jd_atom is used; see mali_kbase_defs.h.

kbase_jd_atom appears to be the kernel's internal data structure and is not shared with the hardware. Keeping it separate from the u/k interface (atom_v2) makes it easy to change.

(base_jd_atom_v2.jc: /**< job-chain GPU address */)

The former is passed in by user space and the latter is the driver's internal abstraction. Both have a jc field, which we guess points to the GPU kernel instructions.

A good starting point is kbase_api_job_submit() in mali_kbase_core_linux.c, which is invoked when user space submits a job.

Since both structures carry a jc field, the GPU instructions they refer to are probably the same. Both are bare-metal. When compiling an OpenCL kernel, either we specify the GPU model or the CL runtime checks which GPU is available in the system.

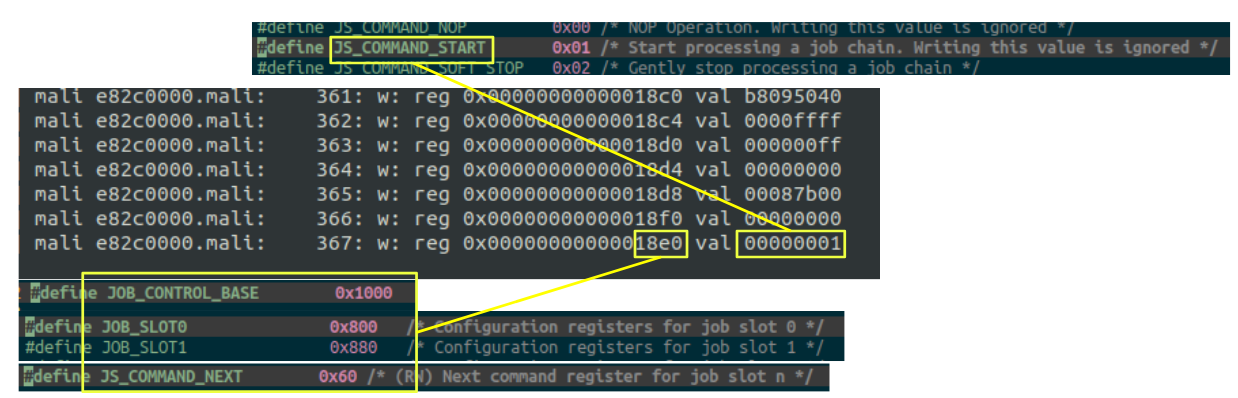

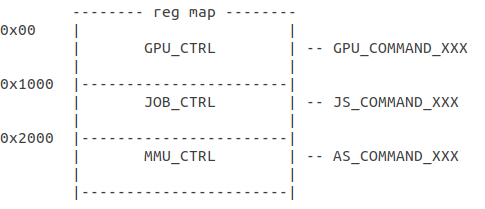

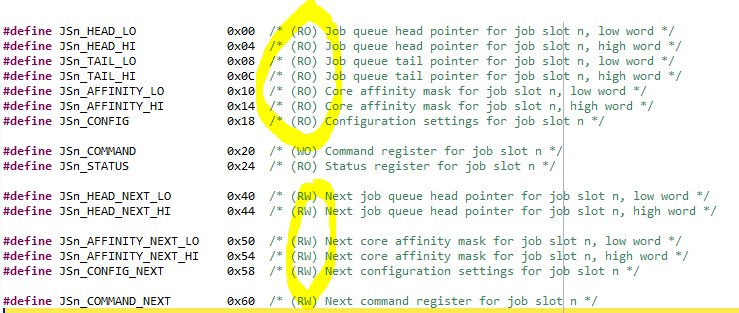

6.4 The register interface

6.4.1 Overview

Primarily, there are three types of registers:

- GPU_CTRL: GPU control

- JOB_CTRL: job control

- MMU_MGMT: MMU management

Note that each type may have multiple instances, e.g. one GPU command register, 3 JS command registers, and 8 AS command registers. Three JS (job slots) means there are three types of jobs, e.g. shader, tiler, ... The 8 AS are for the multiple address spaces used by the GPU, i.e. the number of page tables the GPU can hold. Normally we only use a single page table since we do not create multiple contexts.

Three types of commands can be written to the corresponding register types:

- GPU_COMMAND: GPU-related (e.g. soft reset, performance counter sample, etc.)

- JS_COMMAND: job-related (e.g. start or stop processing a job chain, etc.)

- AS_COMMAND: MMU-related (e.g. MMU lock, broadcast, etc.)
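To make the "one block per register type, several instances per block" layout concrete, here is a small C sketch that computes the MMIO offset of each command register. The base addresses, strides, and register offsets are what we recall from mali_kbase_gpu_regmap.h; treat every constant as an assumption and check it against your own driver tree. The helper function names are ours.

```c
#include <assert.h>
#include <stdint.h>

/* Assumed values, recalled from mali_kbase_gpu_regmap.h; verify before use. */
#define GPU_CONTROL_BASE        0x0000u
#define JOB_CONTROL_BASE        0x1000u
#define MEMORY_MANAGEMENT_BASE  0x2000u

#define GPU_COMMAND  0x030u  /* offset inside the GPU control block */
#define JOB_SLOT0    0x800u  /* first job slot inside the job control block */
#define JS_COMMAND   0x20u   /* offset inside one job slot (stride 0x80) */
#define MMU_AS0      0x400u  /* first address space inside the MMU block */
#define AS_COMMAND   0x18u   /* offset inside one address space (stride 0x40) */

/* There is a single GPU command register. */
uint32_t gpu_command_reg(void)
{
    return GPU_CONTROL_BASE + GPU_COMMAND;
}

/* One JS_COMMAND register per job slot. */
uint32_t js_command_reg(unsigned slot)
{
    assert(slot < 16);
    return JOB_CONTROL_BASE + JOB_SLOT0 + ((uint32_t)slot << 7) + JS_COMMAND;
}

/* One AS_COMMAND register per hardware address space. */
uint32_t as_command_reg(unsigned as_nr)
{
    assert(as_nr < 16);
    return MEMORY_MANAGEMENT_BASE + MMU_AS0 + ((uint32_t)as_nr << 6) + AS_COMMAND;
}
```

Under these assumptions, the command register for job slot 1 sits at offset 0x18A0 and the one for address space 0 at 0x2418. In the driver, offsets like these are what kbase_reg_write() and kbase_reg_read() take.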

From Arm's blog on command execution:

"The workload in each queue is broken into smaller pieces and dynamically distributed across all of the shader cores in the GPU, or in the case of tiling workloads to a fixed function tiling unit. Workloads from both queues can be processed by a shader core at the same time; for example, vertex processing and fragment processing for different render targets can be running in parallel"

6.4.2 Code

mali_kbase_device_hw.c

- kbase_reg_write()

- kbase_reg_read()

Register definitions: mali_kbase_gpu_regmap.h

6.4.3 Reg map

6.5 Job slots

This is the CPU/GPU interface.

A job slot pertains to a job type, e.g. SLOT1 is for tiling/vertex/compute. Slot 1 is the default.

Even though the test application does not seem to do any tiling/vertex work, the atom coming from the user runtime is not marked BASE_JD_REQ_ONLY_COMPUTE (but see the update below).

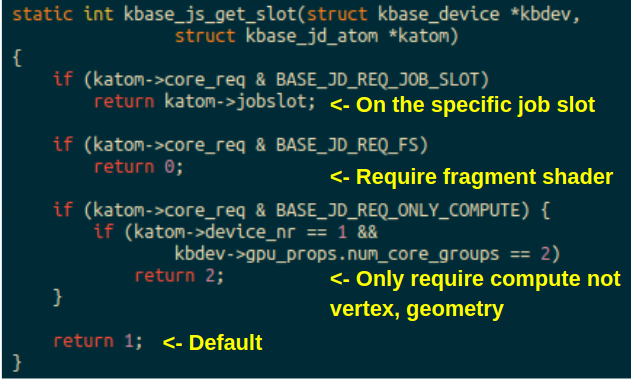

Kernel code below:

UPDATE: if a job requires a compute shader, there seem to be two request types:

- BASE_JD_REQ_CS - our guess: used by OpenGL compute?

- BASE_JD_REQ_ONLY_COMPUTE - this is what we got from an OpenCL compute kernel

In the test application (OpenCL), the atom coming from the user runtime does have the BASE_JD_REQ_ONLY_COMPUTE request type. However, its device_nr and num_core_groups do not match the constants in the condition, so the function still returns SLOT1. The values we observed:

BASE_JD_REQ_ONLY_COMPUTE device_nr: 0 num_core_groups: 1
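The following C sketch is our reconstruction of the slot-selection logic described above, not a copy of the driver code. The flag bit values are placeholders (the real ones live in mali_base_kernel.h). Two rules are recalled from our reading of the driver and may differ in your version: that fragment jobs go to slot 0, and that slot 2 requires device_nr == 1 together with num_core_groups == 2.

```c
#include <assert.h>
#include <stdint.h>

/* Placeholder bit values for illustration only. */
#define REQ_FS            (1u << 0)   /* fragment shader job */
#define REQ_CS            (1u << 1)   /* compute shader job */
#define REQ_ONLY_COMPUTE  (1u << 10)  /* compute-only job, as seen with OpenCL */

/*
 * Pick a job slot for an atom (hypothetical helper).
 * Assumed rules: fragment work -> slot 0; compute-only work pinned to the
 * second of two core groups -> slot 2; everything else -> slot 1.
 */
int pick_job_slot(uint32_t core_req, unsigned device_nr, unsigned num_core_groups)
{
    assert(num_core_groups >= 1);

    if (core_req & REQ_FS)
        return 0;

    if ((core_req & REQ_ONLY_COMPUTE) &&
        device_nr == 1 && num_core_groups == 2)
        return 2;

    return 1;
}
```

With the values we observed (BASE_JD_REQ_ONLY_COMPUTE, device_nr 0, num_core_groups 1), this sketch returns slot 1, which matches what we saw on the board.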

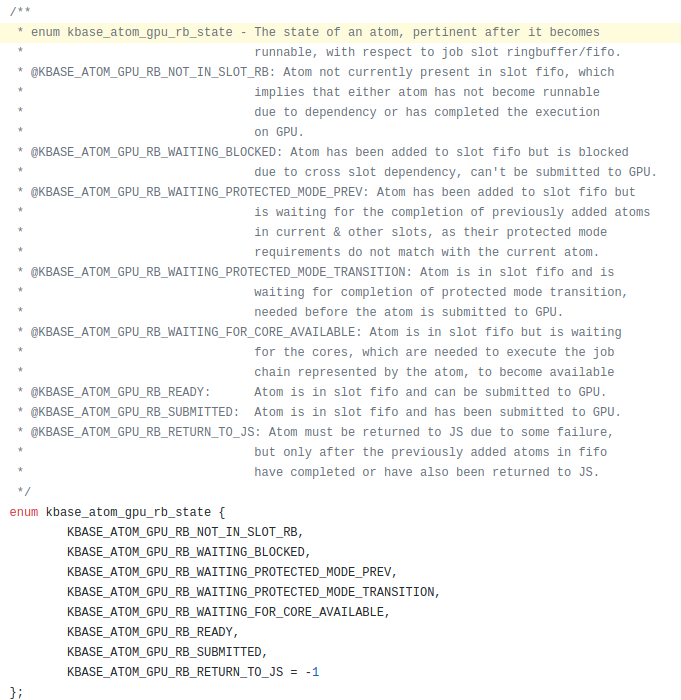

6.5.1 The ringbuffer inside a job slot

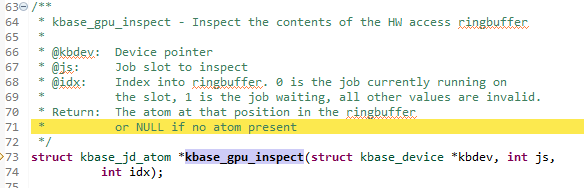

The GPU is aware of the "current" and "next" jobs. A ringbuffer (rb), which seems to be managed by the driver, tracks these "current" and "next" jobs on the GPU and can hold at most 2 jobs. The driver puts into the ringbuffer the jobs it intends to submit next or has already submitted.

The driver manages jobs (atoms) by putting them into the ringbuffer; both the atoms and the ringbuffers are driver-side state.

Basically, the driver pulls a runnable atom from the queue, writes it to the hardware job slot registers, then kicks the GPU.

So the driver decides which atom runs next. The GPU seems to care only about the atom written to the job slot: it checks the next job slot and command, then acts on the command.

The driver checks the atom's state first and then puts it into the job slot registers.

Code snippet

A ring buffer has two items, for the current and next jobs.

- Regs for the "next" job are R/W (==> the driver can modify them since the job is not kicked yet).

- Regs for the "current" job are R/O (==> the job is being executed on the GPU; the driver cannot do anything about it).
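The two-entry ringbuffer described above can be sketched in plain C as follows. This is a simplified model for illustration, not the driver's implementation: struct atom, struct slot_rb, and the rb_* helpers are hypothetical names, and the real driver also tracks a per-entry state that we leave out here.

```c
#include <assert.h>
#include <stddef.h>

#define SLOT_RB_SIZE 2  /* one entry for "current", one for "next" */

struct atom { int id; };  /* hypothetical stand-in for a driver atom */

struct slot_rb {
    struct atom *entries[SLOT_RB_SIZE];
    unsigned read_idx;   /* position of the "current" job */
    unsigned write_idx;  /* position where the next submission goes */
};

/* Number of jobs the slot currently holds (0, 1 or 2). */
unsigned rb_count(const struct slot_rb *rb)
{
    assert(rb != NULL);
    return rb->write_idx - rb->read_idx;
}

/* Queue an atom; returns 0 on success, -1 if current and next are both taken. */
int rb_submit(struct slot_rb *rb, struct atom *a)
{
    assert(rb != NULL && a != NULL);
    if (rb_count(rb) == SLOT_RB_SIZE)
        return -1;
    rb->entries[rb->write_idx % SLOT_RB_SIZE] = a;
    rb->write_idx++;
    return 0;
}

/* Retire the "current" job; the old "next" job, if any, becomes "current". */
struct atom *rb_complete(struct slot_rb *rb)
{
    struct atom *done;

    assert(rb != NULL);
    if (rb_count(rb) == 0)
        return NULL;
    done = rb->entries[rb->read_idx % SLOT_RB_SIZE];
    rb->read_idx++;
    return done;
}
```

A third rb_submit() fails until rb_complete() retires the current job, which mirrors the rule that the hardware exposes only a current and a next set of registers per slot.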

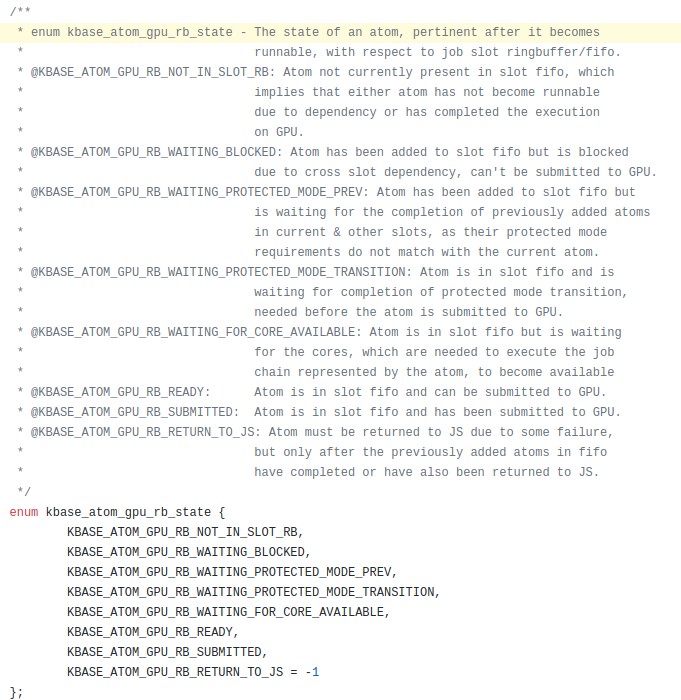

6.5.2 Ringbuffer slot state

This is well commented

More evidence from the NoMali project (see jobslot.hh)

6.6 Memory management

6.6.1 Memory group manager

On hikey960, this is in mali_kbase_native_mgm.[c|h]

* kbase_native_mgm_dev - Native memory group manager device

* An implementation of the memory group manager interface that is intended for

* internal use when no platform-specific memory group manager is available.

* It ignores the specified group ID and delegates to the kernel's physical

* memory allocation and freeing functions.

Note this code is generic (drivers/base), not quite Mali specific. In odroid-xu4 v4.15 there seems to be no such component (??). It is also absent in the Hikey960 Android kernel (4.19).

Seems the "root" of mm allocation.

memory_group_manager.h

struct memory_group_manager_device - Device structure for a memory group

It is called a "device structure". Can there be such hardware?

It defines a bunch of memory_group_manager_ops to be implemented… platform specific?

kbdev->mgm_dev

6.6.2 Memory pool vs. memory group manager

One pool may contain pages from a specific memory group

Group id?

A physical memory group ID. The meaning of this is defined by the systems integrator (???)

A memory group ID to be passed to a platform-specific memory group manager

- Pool -- a frontend of memory allocation

- Memory group manager -- the backend; platform specific. May or may not be present (if not, just a sw impl?)

cf: drivers/memory_group_manager/XXX

"An example mem group manager" This seems a sw-only implementation.

From kernel configuration: "This option enables an example implementation of a memory group manager for allocation and release of pages for memory pools managed by Mali GPU device drivers."

The example instance will be init'd during "device probe time". Because it emulates a hw device (??)

There's also "physical mem group manager"? See the devicetree binding memory_group_manager.txt

6.7 Tracing

mali_kbase_tracepoints.[ch] are useful. Note that they are auto-generated by some Python code, which is missing from the kernel tree.

There’s also debugfs support (MALI_MIDGARD_ENABLE_TRACE)

6.7.1 mali_kbase_tracepoints.c

These seem all streamline events. Where do they go? via u/k buffer to Streamline?

/* Message ids of trace events that are recorded in the timeline stream. */

enum tl_msg_id_obj {

KBASE_TL_NEW_CTX,

KBASE_TL_NEW_GPU,

#define OBJ_TL_LIST \

TP_DESC(KBASE_TL_NEW_CTX, \

"object ctx is created", \

"@pII", \

"ctx,ctx_nr,tgid") \

6.7.2 Timeline stream abstraction

See mali_kbase_tlstream.h, which is very well documented.

Who will consume the stream?

6.7.3 Timeline infrastructure

mali_kbase_timeline.c

mali_kbase_timeline.h

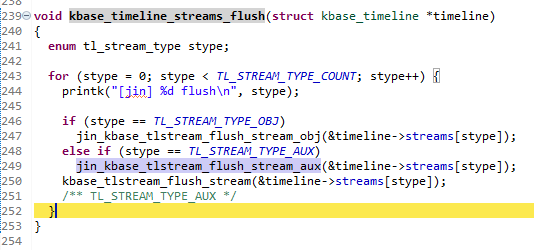

kbase_timeline_streams_flush(). To be invoked by user? It appears timeline shares a buffer with userspace? Flush to userspace? How is this done?

KBASE_AUX_PAGESALLOC

Invoked upon phys page alloc/free…. Indicating changes of pages

6.7.4 Streams

Two types of "streams". AUX and OBJ. Seems: _obj is for obj operation, like creating context, MMU. _aux is for other events, like PM, page alloc, etc.

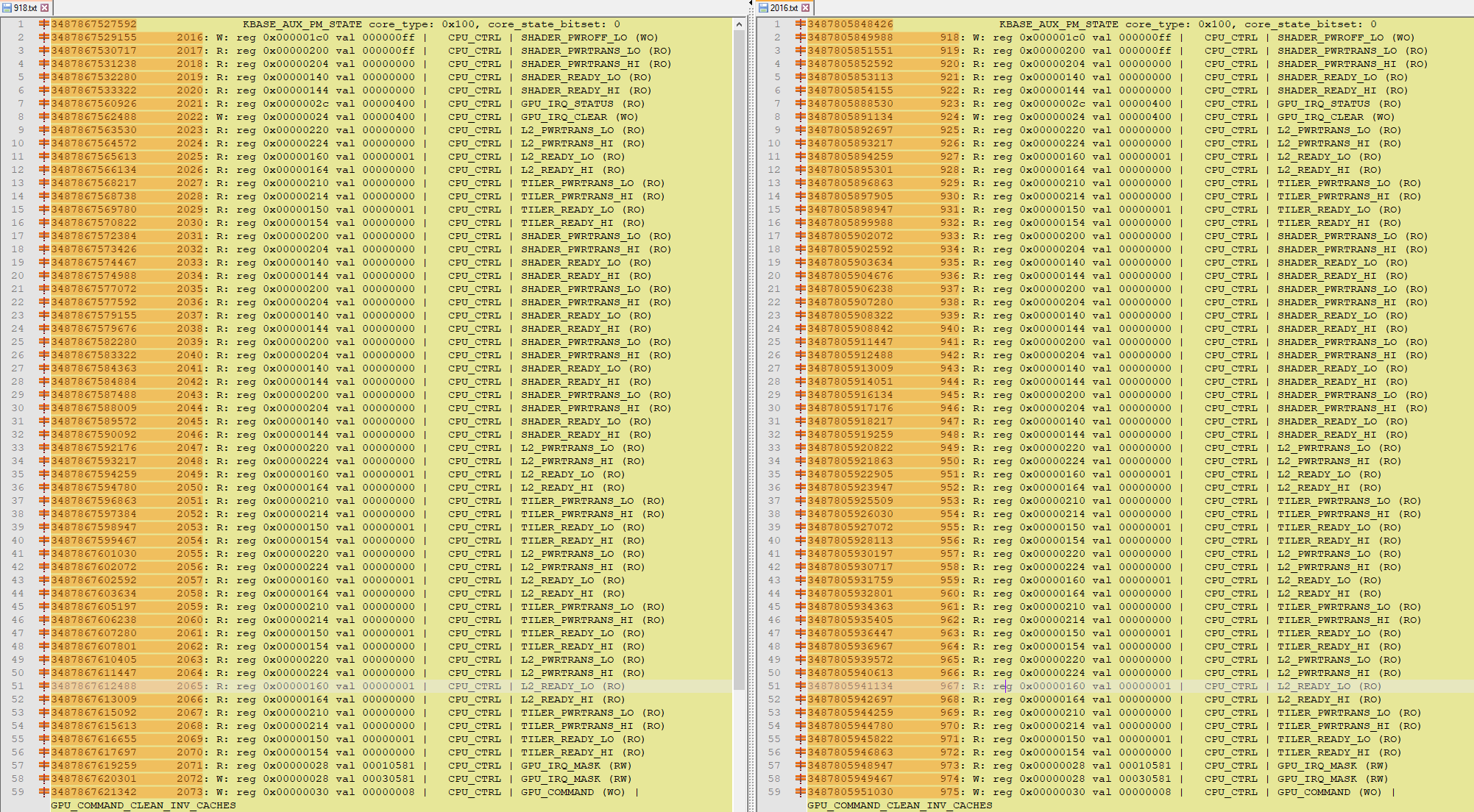

6.7.5 Sample traces dumped from the driver

6.8 GPU virtual memory

6.8.1 Top level funcs:

mali_kbase_mmu.h // well written

6.8.2 MMU

GPU MMU is programmed by CPU.

Looks like a 4-level page table?? (This is for Bifrost; they didn’t change the macro names.)

#define MIDGARD_MMU_TOPLEVEL MIDGARD_MMU_LEVEL(0)

#define MIDGARD_MMU_BOTTOMLEVEL MIDGARD_MMU_LEVEL(3)

See kbase_mmu_teardown_pages().

For Midgard, 2 levels.
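To illustrate what a 4-level table (levels 0 to 3) implies for a GPU virtual address, the sketch below splits an address into one index per level. It assumes 4 KB pages and 512 entries (9 bits) per table, which is what the AARCH64_4K translation mode suggests; we have not confirmed the entry count for every GPU. pgd_index() is our own helper name, not a driver function.

```c
#include <assert.h>
#include <stdint.h>

#define GPU_PAGE_SHIFT   12  /* assumption: 4 KB pages */
#define BITS_PER_LEVEL   9   /* assumption: 512 entries per table */
#define MMU_TOPLEVEL     0
#define MMU_BOTTOMLEVEL  3

/* Index into the page table at `level` for the given GPU virtual address. */
unsigned pgd_index(uint64_t gpu_va, int level)
{
    uint64_t vpfn;

    assert(level >= MMU_TOPLEVEL && level <= MMU_BOTTOMLEVEL);
    vpfn = gpu_va >> GPU_PAGE_SHIFT;  /* virtual page frame number */
    return (unsigned)((vpfn >> ((MMU_BOTTOMLEVEL - level) * BITS_PER_LEVEL)) & 0x1FFu);
}
```

Under these assumptions, the address 0xffff82cd4000 (a region start from our OpenGL dump in section 7.5) decomposes into indices 511, 510, 22 and 212 for levels 0 through 3.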

Update hw mmu entries??

kbase_mmu_hw_configure, called by mmu_update(), by kbase_mmu_update

Reg defs

See mali_kbase_gpu_regmap.h. Max 16 address spaces.

D/S

struct kbase_mmu_table - object representing a set of GPU page tables. @pgd -- pgtable root (hw address)

struct kbase_mmu_mode - object containing pointer to methods invoked for programming the MMU, as per the MMU mode supported by Hw.

struct kbase_as - object representing an address space of GPU.

One kbase_device has many (up to 16) kbase_as:

struct kbase_as as[BASE_MAX_NR_AS];

Each @kbase_context has a @as_nr, which seems to point to the as.

Code

drivers/gpu/arm/midgard/mmu

High-level MMU function: kbase_mmu_flush_invalidate_noretain()

(...goes into...) The actual hw functions operating the MMU: mali_kbase_mmu_hw_direct.c --> kbase_mmu_hw_do_operation(), etc.

Pgtable

Allocate page table for GPU kbase_mmu_alloc_pgd()

Kernel threads

Workqueue: mali_mmu. Workers: page_fault_worker, bus_fault_worker.

Address space

This is like a "segment". One address space seems to be a tree of page tables. The actual count of address spaces is whatever the MMU hardware supports:

* @nr_hw_address_spaces: Number of address spaces actually available in the

GPU, remains constant after driver initialisation.

* @nr_user_address_spaces: Number of address spaces available to user contexts

// 8 for G71

See kbase_device_as_init()

// desc for one addr space…

struct kbase_mmu_setup {

u64 transtab; // translation table? See AS_TRANSTAB_BASE_MASK

u64 memattr;

u64 transcfg; // translation cfg? See AS_TRANSCFG_ADRMODE_AARCH64_4K etc.

};

Configuration kbase_mmu_get_as_setup()

mmap path

At that point, only the pages for the GPU page table itself have been allocated.

We think the mapping happens when mmap() is called, which invokes kbase_mmap() and thus kbase_context_mmap(). A structure called kbase_va_region holds mapping-related information.

Basically, pages are allocated on the GPU side, and then the CPU calls kbase_mmap to get at those pages. Look at kbase_reg_mmap() and kbase_gpu_mmap(). The driver tries to let the GPU use the same VA as the CPU, and maps that VA to the PA by updating the MMU.

7. Address space

In this section, we try to understand the GPU address spaces used by Mali Bifrost. To this end, we dumped all the address spaces while running an OpenCL vector addition.

7.1 AS information from device driver

The address spaces are allocated by the device driver but controlled by the user-space runtime. For instance, the device driver receives the following file operations and IOCTL commands from the user-space runtime:

- KBASE_FOPS_GET_UNMAPPED_AREA: gets the CPU address space used by both the user-space runtime and the device driver

- KBASE_FOPS_MMAP: maps the AS to the GPU and sets up the VMOPS (e.g. open, close, and fault)

- KBASE_IOCTL_MEM_ALLOC: allocates the actual physical memory (pages)

[AS:0 type: 0] start: ffff9428e000, end: ffff942ae000, flags: 606a, valid: 0, nr_pages: 32, nents: 32

ZONE_SAME_VA | CPU_WR | CPU_RD | GPU_RD | GPU_NX | GPU_CACHED |

[AS:1 type: 0] start: ffff9c03c000, end: ffff9c03d000, flags: 606e, valid: 1, nr_pages: 1, nents: 1

ZONE_SAME_VA | CPU_WR | CPU_RD | GPU_WR | GPU_RD | GPU_NX | GPU_CACHED |

[AS:2 type: 0] start: ffff9c03b000, end: ffff9c03c000, flags: 606e, valid: 1, nr_pages: 1, nents: 1

ZONE_SAME_VA | CPU_WR | CPU_RD | GPU_WR | GPU_RD | GPU_NX | GPU_CACHED |

[AS:3 type: 0] start: ffff9c03a000, end: ffff9c03b000, flags: 606e, valid: 1, nr_pages: 1, nents: 1

ZONE_SAME_VA | CPU_WR | CPU_RD | GPU_WR | GPU_RD | GPU_NX | GPU_CACHED |

[AS:4 type: 0] start: ffff9c039000, end: ffff9c03a000, flags: 206a, valid: 1, nr_pages: 1, nents: 1

ZONE_SAME_VA | CPU_WR | GPU_RD | GPU_NX | GPU_CACHED |

[AS:5 type: 2] start: ffff93800000, end: ffff93a01000, flags: 627e, valid: 1, nr_pages: 513, nents: 513

ZONE_SAME_VA | CPU_WR | CPU_RD | GPU_WR | GPU_RD | GPU_NX | CPU_CACHED | GPU_CACHED |

[AS:6 type: 2] start: ffff93a4d000, end: ffff93a8d000, flags: 627e, valid: 1, nr_pages: 64, nents: 64

ZONE_SAME_VA | CPU_WR | CPU_RD | GPU_WR | GPU_RD | GPU_NX | CPU_CACHED | GPU_CACHED |

[AS:7 type: 2] start: ffff93a0d000, end: ffff93a4d000, flags: 627e, valid: 1, nr_pages: 64, nents: 64

ZONE_SAME_VA | CPU_WR | CPU_RD | GPU_WR | GPU_RD | GPU_NX | CPU_CACHED | GPU_CACHED |

[AS:8 type: 1] start: ffff92000000, end: ffff93000000, flags: 6062, valid: 1, nr_pages: 4096, nents: 1

ZONE_SAME_VA | CPU_WR | CPU_RD | GPU_RD | GPU_CACHED |

[AS:9 type: 4] start: ffff9c038000, end: ffff9c039000, flags: 606e, valid: 1, nr_pages: 1, nents: 1

ZONE_SAME_VA | CPU_WR | CPU_RD | GPU_WR | GPU_RD | GPU_NX | GPU_CACHED |
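The hex flags and the symbolic names in the dump above can be cross-checked with a small decoder. The bit positions below are inferred from the dump itself (they reproduce every flags/name pair listed above) and we believe they match the KBASE_REG_* definitions in mali_kbase_mem.h, but treat them as assumptions for other driver versions. Some bits that are set in the raw values (e.g. bit 6) have no label in the dump and are left undecoded. The function names are ours.

```c
#include <assert.h>
#include <stdint.h>
#include <stdio.h>
#include <string.h>

/* Bit positions inferred from the dump; assumed to mirror KBASE_REG_*. */
struct flag_name { uint32_t bit; const char *name; };

static const struct flag_name flag_names[] = {
    { 1u << 1,  "CPU_WR" },
    { 1u << 14, "CPU_RD" },
    { 1u << 2,  "GPU_WR" },
    { 1u << 13, "GPU_RD" },
    { 1u << 3,  "GPU_NX" },
    { 1u << 4,  "CPU_CACHED" },
    { 1u << 5,  "GPU_CACHED" },
};

/* Render the known flag bits as "A | B | C", in the dump's own order. */
void decode_region_flags(uint32_t flags, char *out, size_t out_len)
{
    size_t i;

    assert(out != NULL && out_len > 0);
    out[0] = '\0';
    for (i = 0; i < sizeof(flag_names) / sizeof(flag_names[0]); i++) {
        size_t used = strlen(out);

        if (!(flags & flag_names[i].bit))
            continue;
        snprintf(out + used, out_len - used, "%s%s",
                 used ? " | " : "", flag_names[i].name);
    }
}

/* Page count of a region, assuming 4 KB pages. */
uint64_t region_nr_pages(uint64_t start, uint64_t end)
{
    assert(end >= start);
    return (end - start) >> 12;
}
```

For instance, decoding 206a yields CPU_WR | GPU_RD | GPU_NX | GPU_CACHED, as printed for AS4, and region_nr_pages(0xffff9428e000, 0xffff942ae000) yields the 32 pages reported for AS0.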

7.2 How many address spaces?

The number of ASs depends on the GPU application (e.g. how many GPU kernels are loaded, or the size of the input/output and intermediate data). However, every application has at least 5 ASs, AS0 to AS4, by default (pre-reserved). Their purpose is unknown. If the input/output sizes are quite small, AS5 sometimes stores them.

7.3 Types of GPU address space

Besides the unknown ones, we can guess the types of some ASs.

- AS for storing data: AS6 and AS7 are used for input/output data.

- AS for storing the GPU binary: AS8 is used for the GPU binary; its GPU_NX (non-executable) flag is not set. Although 4096 pages are reserved (not committed), the device driver incrementally allocates memory when a new GPU kernel is compiled and thus loaded. See KBASE_IOCTL_MEM_COMMIT.

- AS for the job chain: AS9 is used for the job chain. While the number of job chain ASs is dynamic, the size of each is always limited to a single page. To be specific, when you have more GPU kernels and jobs, the device driver allocates more single-page ASs to store job chains.

7.4 OpenCL

An AS with flags 606e and nr_pages = 1 indicates that it is used for a job chain.

7.5 OpenGL

OpenGL has a different AS layout and seems to reserve some pages for job chains.

An AS with flags 607e and nr_pages = 8 indicates that it is used for a job chain.

[AS:0 type: 0] start: ffff81bd7000, end: ffff81bf7000, flags: 606a, valid: 0, nr_pages: 32, nents: 32

ZONE_SAME_VA | CPU_WR | CPU_RD | GPU_RD | GPU_NX | GPU_CACHED |

[AS:1 type: 0] start: ffff82cfc000, end: ffff82cfd000, flags: 606e, valid: 1, nr_pages: 1, nents: 1

ZONE_SAME_VA | CPU_WR | CPU_RD | GPU_WR | GPU_RD | GPU_NX | GPU_CACHED |

[AS:2 type: 0] start: ffff82cdf000, end: ffff82ce0000, flags: 606e, valid: 1, nr_pages: 1, nents: 1

ZONE_SAME_VA | CPU_WR | CPU_RD | GPU_WR | GPU_RD | GPU_NX | GPU_CACHED |

[AS:3 type: 0] start: ffff82cde000, end: ffff82cdf000, flags: 606e, valid: 1, nr_pages: 1, nents: 1

ZONE_SAME_VA | CPU_WR | CPU_RD | GPU_WR | GPU_RD | GPU_NX | GPU_CACHED |

[AS:4 type: 0] start: ffff82cdd000, end: ffff82cde000, flags: 206a, valid: 1, nr_pages: 1, nents: 1

ZONE_SAME_VA | CPU_WR | GPU_RD | GPU_NX | GPU_CACHED |

[AS:5 type: 2] start: ffff81b97000, end: ffff81bd7000, flags: 607e, valid: 0, nr_pages: 64, nents: 64

ZONE_SAME_VA | CPU_WR | CPU_RD | GPU_WR | GPU_RD | GPU_NX | CPU_CACHED | GPU_CACHED |

[AS:6 type: 0] start: ffff81b57000, end: ffff81b97000, flags: 606e, valid: 1, nr_pages: 64, nents: 64

ZONE_SAME_VA | CPU_WR | CPU_RD | GPU_WR | GPU_RD | GPU_NX | GPU_CACHED |

[AS:7 type: 0] start: ffff81b4a000, end: ffff81b57000, flags: 206c, valid: 0, nr_pages: 13, nents: 13

ZONE_SAME_VA | GPU_WR | GPU_RD | GPU_NX | GPU_CACHED |

[AS:8 type: 1] start: ffff77000000, end: ffff78000000, flags: 6062, valid: 1, nr_pages: 4096, nents: 1

ZONE_SAME_VA | CPU_WR | CPU_RD | GPU_RD | GPU_CACHED |

[AS:9 type: 4] start: ffff82cdc000, end: ffff82cdd000, flags: 606a, valid: 1, nr_pages: 1, nents: 1

ZONE_SAME_VA | CPU_WR | CPU_RD | GPU_RD | GPU_NX | GPU_CACHED |

[AS:10 type: 0] start: ffff81b0a000, end: ffff81b4a000, flags: 626e, valid: 1, nr_pages: 64, nents: 64

ZONE_SAME_VA | CPU_WR | CPU_RD | GPU_WR | GPU_RD | GPU_NX | GPU_CACHED |

[AS:11 type: 0] start: ffff81aca000, end: ffff81b0a000, flags: 606e, valid: 1, nr_pages: 64, nents: 64

ZONE_SAME_VA | CPU_WR | CPU_RD | GPU_WR | GPU_RD | GPU_NX | GPU_CACHED |

[AS:12 type: 2] start: ffff82cd4000, end: ffff82cdc000, flags: 607e, valid: 1, nr_pages: 8, nents: 8

ZONE_SAME_VA | CPU_WR | CPU_RD | GPU_WR | GPU_RD | GPU_NX | CPU_CACHED | GPU_CACHED |

[AS:13 type: 2] start: ffff81ac2000, end: ffff81aca000, flags: 627e, valid: 1, nr_pages: 8, nents: 8

ZONE_SAME_VA | CPU_WR | CPU_RD | GPU_WR | GPU_RD | GPU_NX | CPU_CACHED | GPU_CACHED |

[tgx_dump_sync_as] total count of sync_as: 2

The dump above was taken while running gc_absdiff; both AS12 and AS13 seem to be used for job chains. Here is the content of the job chains:

ffff82cd4000 | 00 00 00 00 00 00 00 00 00 00 00 00 00 00 00 00

09 00 02 00 01 00 00 00 00 00 00 00 00 00 00 00

B8 38 00 00 00 00 86 23 00 00 00 1C 00 00 00 00

00 00 00 00 00 00 00 00 00 00 00 00 00 00 00 00

02 00 00 00 00 00 00 00 00 00 00 00 00 00 00 00

00 00 00 00 00 00 00 00 C0 40 CD 82 FF FF 00 00

00 00 00 00 00 00 00 00 00 00 00 00 00 00 00 00

00 20 AC 81 FF FF 00 00 C0 CF CD 82 FF FF 00 00

00 00 00 00 00 00 00 00 00 00 00 00 00 00 00 00

00 00 00 00 00 00 00 00 00 00 00 00 00 00 00 00

00 00 00 00 00 00 00 00 00 00 00 00 00 00 00 00

40 42 CD 82 FF FF 00 00 00 00 00 00 00 00 00 00

07 D0 40 CD 82 FF FF 00 05 00 A0 AC 81 FF FF 00

80 34 B2 81 FF FF 00 00 8A 32 00 00 00 00 00 00

40 6A B1 81 FF FF 00 00 8A 32 00 00 00 00 00 00

00 A0 B0 81 FF FF 00 00 8A 32 00 00 00 00 00 00

00 20 AC 81 FF FF 00 00 00 00 00 00 00 00 00 00

00 00 00 00 00 00 00 00 00 00 00 00 00 00 00 00

00 00 00 00 00 00 00 00 00 00 00 00 00 00 00 00

00 00 00 00 00 00 00 00 00 00 00 00 00 00 00 00

00 00 00 00 00 00 00 00 00 00 00 00 00 00 00 00

00 00 00 00 00 00 00 00 00 00 00 00 00 00 00 00

00 00 00 00 00 00 00 00 00 00 00 00 00 00 00 00

00 00 00 00 00 00 00 00 00 00 00 00 00 00 00 00

00 00 00 00 00 00 00 00 00 00 00 00 00 00 00 00

09 00 01 00 00 00 00 00 00 40 CD 82 FF FF 00 00

00 00 00 00 00 00 40 00 00 00 00 14 00 00 00 00

00 00 00 00 00 00 00 00 00 00 00 00 00 00 00 00

02 00 00 00 00 00 00 00 00 00 00 00 00 00 00 00

00 00 00 00 00 00 00 00 C0 40 CD 82 FF FF 00 00

00 00 00 00 00 00 00 00 00 00 00 00 00 00 00 00

00 20 AC 81 FF FF 00 00 80 CF CD 82 FF FF 00 00

00 00 00 00 00 00 00 00 00 00 00 00 00 00 00 00

00 00 00 00 00 00 00 00 00 00 00 00 00 00 00 00

00 00 00 00 00 00 00 00 00 00 00 00 00 00 00 00

40 42 CD 82 FF FF 00 00 00 00 00 00 00 00 00 00

00 00 00 00 1F 00 00 00 00 A0 B4 81 FF FF 00 00

00 00 00 00 00 00 00 00 00 00 00 00 00 00 00 00

ffff81ac2000 | 00 00 00 00 00 00 00 00 00 00 00 00 00 00 00 00

00 00 00 00 00 00 00 00 00 00 00 00 00 00 00 00

00 00 00 00 00 00 00 00 00 00 00 00 00 00 00 00

00 00 00 00 00 00 00 00 00 00 00 00 00 00 00 00

00 20 AC 81 FF FF 00 00 40 6A B1 81 FF FF 00 00

00 A0 B0 81 FF FF 00 00 80 34 B2 81 FF FF 00 00

00 00 00 00 00 00 00 00 00 00 00 00 00 00 00 00

00 00 00 00 00 00 00 00 00 00 00 00 00 00 00 00

00 00 00 00 00 00 00 00 00 00 00 00 00 00 00 00

00 00 00 00 00 00 00 00 00 00 00 00 00 00 00 00

00 00 00 00 00 00 00 00 00 00 00 00 00 00 00 00

00 00 00 00 00 00 00 00 00 00 00 00 00 00 00 00

00 00 00 00 00 00 00 00 00 00 00 00 00 00 00 00

00 00 00 00 00 00 00 00 00 00 00 00 00 00 00 00

00 00 00 00 00 00 00 00 00 00 00 00 00 00 00 00
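Many 8-byte words in the dump above read as little-endian GPU virtual addresses. The sketch below decodes such a word and looks up which dumped region contains it. This is our reading of the raw bytes, not a documented job descriptor format, and the struct and function names are ours.

```c
#include <assert.h>
#include <stddef.h>
#include <stdint.h>

/* One dumped region: [start, end) plus the AS number printed in the dump. */
struct va_region { uint64_t start; uint64_t end; int id; };

/* Assemble a 64-bit value from 8 little-endian bytes. */
uint64_t read_le64(const uint8_t *p)
{
    uint64_t v = 0;
    int i;

    assert(p != NULL);
    for (i = 7; i >= 0; i--)
        v = (v << 8) | p[i];
    return v;
}

/* Return the id of the region containing va, or -1 if none does. */
int find_region(const struct va_region *regions, size_t n, uint64_t va)
{
    size_t i;

    assert(regions != NULL || n == 0);
    for (i = 0; i < n; i++)
        if (va >= regions[i].start && va < regions[i].end)
            return regions[i].id;
    return -1;
}
```

For example, the bytes C0 40 CD 82 FF FF 00 00 decode to 0xffff82cd40c0, which lies inside AS12 itself, while 00 20 AC 81 FF FF 00 00 decodes to 0xffff81ac2000, the start of AS13. Words such as 80 34 B2 81 FF FF 00 00 (0xffff81b23480) land in AS10, so they may be pointers to data buffers, though that is a guess.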

8. Misc

8.1 # of shader cores (regarding MP)

- You can check the number of shader cores via clinfo, or read the raw GPU properties from the device driver:

void kbase_gpuprops_set(struct kbase_device *kbdev)

{

struct kbase_gpu_props *gpu_props;

struct gpu_raw_gpu_props *raw;

KBASE_DEBUG_ASSERT(NULL != kbdev);

gpu_props = &kbdev->gpu_props;

raw = &gpu_props->props.raw_props;

/* Initialize the base_gpu_props structure from the hardware */

kbase_gpuprops_get_props(&gpu_props->props, kbdev);

/* Populate the derived properties */

kbase_gpuprops_calculate_props(&gpu_props->props, kbdev);

/* Populate kbase-only fields */

gpu_props->l2_props.associativity = KBASE_UBFX32(raw->l2_features, 8U, 8);

gpu_props->l2_props.external_bus_width = KBASE_UBFX32(raw->l2_features, 24U, 8);

gpu_props->mem.core_group = KBASE_UBFX32(raw->mem_features, 0U, 1);

gpu_props->mmu.va_bits = KBASE_UBFX32(raw->mmu_features, 0U, 8);

gpu_props->mmu.pa_bits = KBASE_UBFX32(raw->mmu_features, 8U, 8);

gpu_props->num_cores = hweight64(raw->shader_present);

gpu_props->num_core_groups = hweight64(raw->l2_present);

gpu_props->num_address_spaces = hweight32(raw->as_present);

gpu_props->num_job_slots = hweight32(raw->js_present);

}

- MP means multiple processing and hence represents the number of cores the GPU is equipped with (e.g. Bifrost G71 MP8 has 8 shader cores).

- However, there are some odd products (e.g. G31). See the answer from Arm
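In kbase_gpuprops_set() above, the core count is the number of set bits in the SHADER_PRESENT mask, and the other fields are extracted as bitfields. The user-space sketch below mimics both operations. popcount64() and ubfx32() are our own stand-ins for the kernel's hweight64() and KBASE_UBFX32, and the register values in the usage note are made up for illustration.

```c
#include <assert.h>
#include <stdint.h>

/* Count set bits; stand-in for the kernel's hweight64(). */
unsigned popcount64(uint64_t x)
{
    unsigned n = 0;

    while (x) {
        x &= x - 1;  /* clear the lowest set bit */
        n++;
    }
    return n;
}

/* Extract `size` bits starting at bit `offset`; stand-in for KBASE_UBFX32. */
uint32_t ubfx32(uint32_t value, unsigned offset, unsigned size)
{
    uint32_t mask;

    assert(size >= 1 && offset < 32 && offset + size <= 32);
    mask = (size == 32) ? 0xFFFFFFFFu : ((1u << size) - 1u);
    return (value >> offset) & mask;
}
```

A hypothetical shader_present value of 0xFF gives popcount64(0xFF) == 8, i.e. an MP8 part. With a hypothetical mmu_features value of 0x2830, ubfx32(0x2830, 0, 8) gives 48 VA bits and ubfx32(0x2830, 8, 8) gives 40 PA bits.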